Does how you prompt AI really matter?

I Fell Down the Prompting Rabbit Hole (So You Don't Have To)

Effective prompting is about communicating clearly with AI to get the responses you actually want. The difference between a vague request and a well-crafted prompt can be dramatic (I’ve definitely seen that), but what’s the formula?

For months, I've been rolling my eyes at the endless stream of "prompting hacks" flooding my feeds. You know the ones: "Claim your FREE prompt library!" "These 10 prompts will change your life!" "The SECRET prompt that makes ChatGPT 10x better!"

Look, I'm not anti-prompting. I picked up a few decent examples early on, integrated them into my workflow, and moved on with my life. Job done, right?

But these posts kept multiplying like digital rabbits. Every day, another "prompt engineer" promising the holy grail of AI interactions. Eventually, my skepticism gave way to genuine curiosity: Was I missing something?

Maybe there really is a meaningful difference between a good prompt and a great one. Maybe some techniques actually work better with Claude than ChatGPT. Maybe—just maybe—I'd been leaving performance on the table by treating prompting as an afterthought.

So I did what any self-respecting productivity nerd would do: I went deep. I tested the popular methods, compared results across different models, and tried to separate the signal from the noise.

Here's what I discovered about the art and science of talking to AI—and whether all those "prompting gurus" are onto something real or just selling digital snake oil.

Why Prompts Matter

AI models respond to how you frame your request. They don't inherently know what you're looking for, so the way you ask determines what you get. Clear, specific prompts with proper context consistently produce better results than generic requests.

The Reality Check: What Actually Works

Turns out, there's more signal than noise in the prompting world—but it's not quite as revolutionary as the hype suggests. After testing the main techniques across different models, here's what actually moves the needle:

The techniques aren't magic, but the gains are measurable. Few-shot prompting (giving examples) has been reported to boost accuracy by 5–30% on classification tasks (Brown et al., 2020). Chain-of-thought reasoning ("think step by step") has delivered 10–50% improvements on complex reasoning problems (Wei et al., 2022; Kojima et al., 2022). Role instructions genuinely change the tone and depth of responses, by roughly 10–30% (OpenAI, n.d.; Anthropic, n.d.). The exact numbers depend on the model and the task. These aren't earth-shattering gains, but they're real.

Different models do respond differently to prompting styles. This was the biggest surprise. ChatGPT loves explicit formatting instructions and detailed role-playing. Claude responds exceptionally well to polite, conversational prompts and step-by-step reasoning. Gemini prefers structured, constraint-heavy prompts for factual tasks. It's not just marketing fluff—these models really do have distinct "personalities" when it comes to prompting.

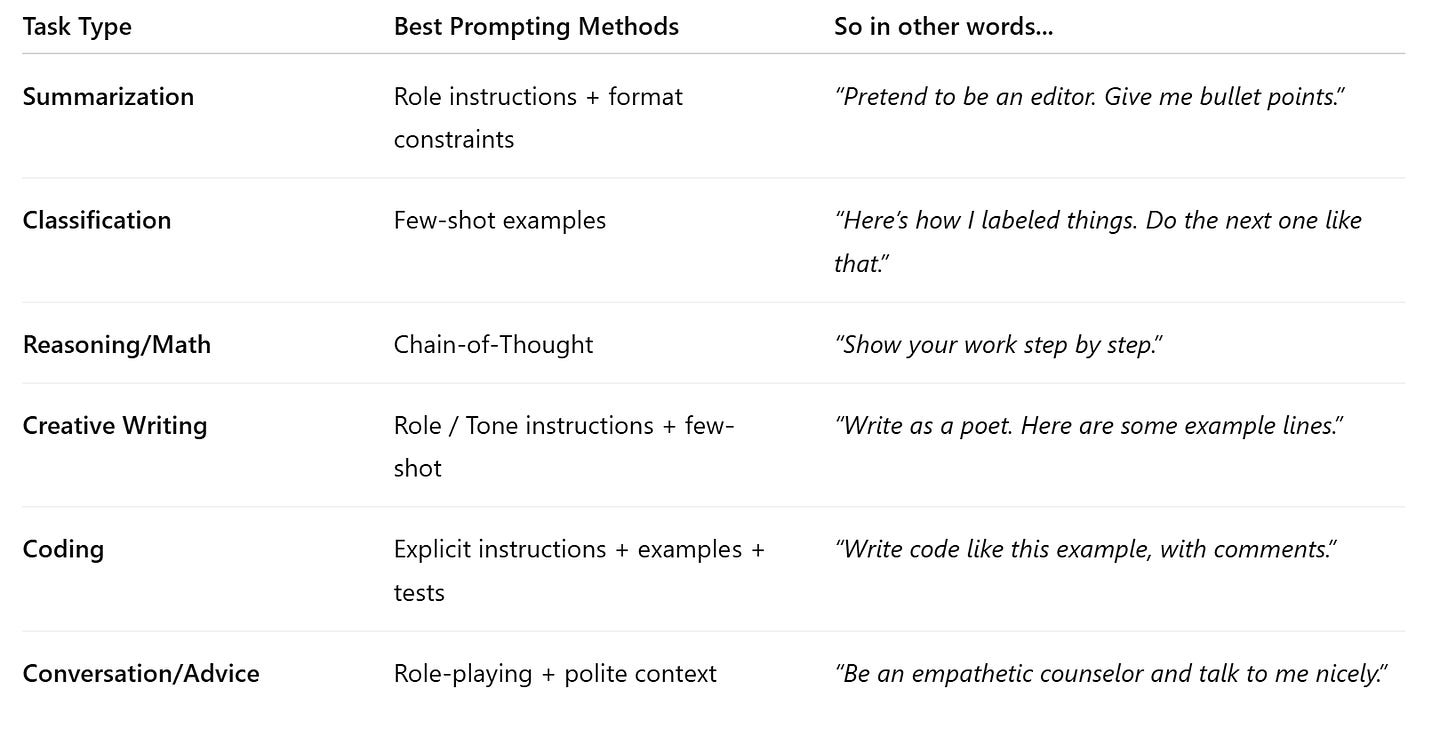

The best approach isn't one technique—it's combining them thoughtfully. Role instructions plus format constraints. Few-shot examples with chain-of-thought reasoning. The magic happens when you layer techniques based on what you're trying to accomplish.

But but but: most people are overthinking it. You don't need a PhD in prompt engineering to get 80% of the benefits. A few simple patterns—being specific about format, giving examples when needed, asking for step-by-step reasoning on complex tasks—will handle most of your use cases.

The real question isn't whether prompting techniques work (they do), but whether the juice is worth the squeeze for your specific workflow. Let me walk you through what I learned, so you can decide for yourself.

Core Prompting Techniques

(Yes, they have names!)

Zero-shot Prompting

Simply ask your question directly without providing examples.

Example: "Translate to Spanish: 'Good morning'."

This works well for straightforward tasks where the AI already understands the expected format.

Few-shot Prompting

Provide a few examples to establish the pattern you want the AI to follow.

Example:

Examples:

'Hi' → 'Hola'

'Bye' → 'Adiós'

Now translate: 'Thanks'

This helps the AI understand your specific style, format, or approach.
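If you ever build prompts in a script, the difference between zero-shot and few-shot comes down to how much you put in front of the question. Here is a minimal Python sketch that assumes the arrow layout from the example above. `zero_shot` and `few_shot` are hypothetical helper names, and the code only builds the prompt text. It does not call any model.

```python
def zero_shot(instruction: str, text: str) -> str:
    """Zero-shot: state the task and the input, with no examples."""
    return f"{instruction}: '{text}'"


def few_shot(examples: list[tuple[str, str]], query: str) -> str:
    """Few-shot: show input → output pairs first, then the new input."""
    lines = ["Examples:"]
    for source, target in examples:
        lines.append(f"'{source}' → '{target}'")
    lines.append(f"Now translate: '{query}'")
    return "\n".join(lines)


pairs = [("Hi", "Hola"), ("Bye", "Adiós")]
print(zero_shot("Translate to Spanish", "Thanks"))
print(few_shot(pairs, "Thanks"))
```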

Chain-of-Thought

Ask the AI to work through problems step by step.

Example: "Calculate 23 + 45. Show your reasoning step by step."

Particularly effective for mathematical problems, logical reasoning, and complex analysis.
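Chain-of-thought pairs well with a fixed answer line, because then you can pull out the result without wading through the reasoning. Here is a sketch in Python. It assumes you tell the model to finish with a line that starts with `Answer:`. That is my own convention, not something the models require. `sample_reply` is a made-up response, not real model output.

```python
import re
from typing import Optional


def chain_of_thought(task: str) -> str:
    """Append a step-by-step cue and ask for a clearly marked final answer."""
    return (
        f"{task}\n"
        "Show your reasoning step by step, then give the final result "
        "on its own line starting with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text of the last 'Answer:' line, or None if there isn't one."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = chain_of_thought("Calculate 23 + 45.")

# Made-up reply, only to demonstrate the parsing step.
sample_reply = "20 + 40 = 60\n3 + 5 = 8\n60 + 8 = 68\nAnswer: 68"
print(extract_answer(sample_reply))  # 68
```

If the model skips the `Answer:` line, `extract_answer` returns `None`. Treat that as your cue to ask again rather than guess.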

Role-Based Instructions

Have the AI adopt a specific perspective or expertise level.

Example: "You are an experienced contract lawyer. Explain the key risks in this agreement."

This changes the depth, tone, and focus of the response.
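In chat-style APIs, the role usually goes in a separate system message instead of the question itself. The sketch below only builds that message list. The `role`/`content` dictionary shape follows a convention that several providers use, but field names and system-message support vary, so check your provider's documentation before you rely on it. `role_messages` is a hypothetical helper.

```python
def role_messages(role: str, request: str, material: str) -> list[dict[str, str]]:
    """Put the persona in a system message and the actual task in a user message."""
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": f"{request}\n\n{material}"},
    ]


messages = role_messages(
    role="an experienced contract lawyer",
    request="Explain the key risks in this agreement.",
    material="[paste agreement text here]",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```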

Format Specification

Define exactly how you want the output structured.

Examples:

"Summarize in exactly 3 bullet points"

"Respond in JSON format"

"Write as a numbered list"
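Format instructions pay off most when you actually check the output. Here is a minimal sketch that assumes you asked for JSON with specific keys. `json_prompt` and `parse_reply` are hypothetical helpers, and `reply` is a hand-written stand-in for model output.

```python
import json


def json_prompt(task: str, keys: list[str]) -> str:
    """Ask for a bare JSON object with the listed keys."""
    key_list = ", ".join(f'"{k}"' for k in keys)
    return (
        f"{task}\n"
        f"Respond with only a JSON object containing the keys {key_list}. "
        "Do not add any text before or after the JSON."
    )


def parse_reply(reply: str, keys: list[str]) -> dict:
    """Parse the reply and confirm it is a JSON object with every requested key."""
    data = json.loads(reply)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [k for k in keys if k not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


keys = ["summary", "bullets"]
prompt = json_prompt("Summarize this article in exactly 3 bullet points.", keys)

# Hand-written stand-in for a model reply.
reply = '{"summary": "Prompts matter.", "bullets": ["Be specific", "Give examples", "Ask for steps"]}'
result = parse_reply(reply, keys)
print(result["bullets"])
```

Every failure here raises `ValueError`, because `json.JSONDecodeError` is a subclass of it. That gives you a natural trigger for a follow-up request asking for valid JSON.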

Iterative Refinement

Provide feedback to improve responses through conversation. This is where things get good: after the first request, the model understands (roughly) where you want to go. From there, you and the LLM work on it together.

Process:

Make initial request

Review AI response

Ask for specific improvements

Continue refining as needed
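Iterative refinement works because each follow-up gets sent along with the whole conversation so far. Here is a sketch of that loop in Python. `echo_model` is a hypothetical stand-in for a real model call. It only reports how many messages it received, so you can watch the history grow.

```python
from typing import Callable

Message = dict[str, str]


def refine(model: Callable[[list[Message]], str], request: str, feedback: list[str]) -> list[Message]:
    """Send the request, then each round of feedback, keeping the full history.

    The model sees every earlier turn. That is what lets a follow-up like
    'make it shorter' refer back to the previous answer.
    """
    history: list[Message] = [{"role": "user", "content": request}]
    history.append({"role": "assistant", "content": model(history)})
    for note in feedback:
        history.append({"role": "user", "content": note})
        history.append({"role": "assistant", "content": model(history)})
    return history


def echo_model(history: list[Message]) -> str:
    """Hypothetical stand-in for a real model: reports how many messages it saw."""
    return f"draft after {len(history)} message(s)"


convo = refine(
    echo_model,
    "Write a product description for a steel water bottle.",
    ["Make it more approachable.", "Cut it to two sentences."],
)
for m in convo:
    print(f"{m['role']}: {m['content']}")
```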

Model-Specific Considerations

Different AI models respond better to certain approaches:

ChatGPT: Works well with explicit instructions, role-playing, and structured formats.

Claude: Responds well to conversational, step-by-step instructions and polite framing. So don’t forget your ‘please’ and ‘thank you’!

Gemini: Performs best with clear structure and factual constraints.

Open-source models (Mistral, etc.): Usually prefer direct, unambiguous instructions.
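One way to act on these differences is to keep the task fixed and change only the framing. The sketch below turns my observations above into simple wrappers. The family names are hypothetical labels and the wording is illustrative. None of it is official vendor guidance.

```python
def adapt_prompt(family: str, task: str) -> str:
    """Wrap one task in a framing suited to a model family (illustrative only)."""
    family = family.lower()
    if family == "chatgpt":
        # Explicit role plus explicit output structure.
        return f"You are a meticulous assistant.\nTask: {task}\nFormat: a numbered list."
    if family == "claude":
        # Conversational and polite, with a step-by-step cue.
        return f"Could you please help with this? {task} Please think it through step by step. Thank you!"
    if family == "gemini":
        # Clear structure plus factual constraints.
        return f"Task: {task}\nConstraints:\n- Use only facts you can support.\n- Keep each point under 25 words."
    # Fallback for open-source models such as Mistral: short and unambiguous.
    return f"{task} Answer directly."


task = "List the three biggest risks of moving our warehouse."
for family in ("ChatGPT", "Claude", "Gemini", "Mistral"):
    print(f"--- {family} ---\n{adapt_prompt(family, task)}\n")
```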

Practical Examples

For Summarization

"Summarize this research paper in 5 bullet points, focusing on practical applications for software developers."

For Analysis

"Acting as a business analyst, identify the three main risks in this quarterly report and explain each in one paragraph."

For Creative Tasks

"Write a product description in the style of these examples: [provide 2-3 samples]. The tone should be professional but approachable."

For Problem-Solving

"I need to reduce server costs by 30%. Think through this step by step: analyze current usage, identify optimization opportunities, and recommend specific actions."
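Once a prompt works, turning it into a template saves you from retyping it. Here is a sketch using Python's standard `string.Template`. The placeholder names are my own choice, and filling them in reproduces the examples above.

```python
from string import Template

# Reusable versions of the four practical examples; placeholder names are illustrative.
TEMPLATES = {
    "summarize": Template(
        "Summarize this $doc_type in $n bullet points, focusing on $focus."
    ),
    "analyze": Template(
        "Acting as a $role, identify the $n main risks in this $doc_type "
        "and explain each in one paragraph."
    ),
    "create": Template(
        "Write a $thing in the style of these examples: $samples. "
        "The tone should be $tone."
    ),
    "solve": Template("I need to $goal. Think through this step by step: $steps."),
}

prompt = TEMPLATES["summarize"].substitute(
    doc_type="research paper",
    n=5,
    focus="practical applications for software developers",
)
print(prompt)
```

`substitute` raises a `KeyError` if you leave a placeholder unfilled. That is a cheap guard against sending a half-finished prompt.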

Quick Reference

Advanced Techniques

Combine methods: Use role instructions with few-shot examples and format constraints together.

Provide context: Include relevant background information that helps the AI understand your situation.

Specify constraints: Mention limitations, requirements, or things to avoid.

Use follow-up questions: Don't hesitate to ask for clarification or modifications.
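Combining methods can be as simple as stacking labeled sections in a consistent order. Here is a minimal sketch built around a `PromptParts` dataclass of my own design. It is hypothetical, not a library type. Empty fields are simply left out of the prompt.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    """Hypothetical container for the pieces of a layered prompt."""
    task: str
    role: str = ""
    context: str = ""
    constraints: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)
    output_format: str = ""

    def render(self) -> str:
        """Join the non-empty pieces in a fixed order, separated by blank lines."""
        sections = []
        if self.role:
            sections.append(f"You are {self.role}.")
        if self.context:
            sections.append(f"Context: {self.context}")
        sections.append(f"Task: {self.task}")
        if self.constraints:
            sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in self.constraints))
        if self.examples:
            sections.append("Examples:\n" + "\n".join(self.examples))
        if self.output_format:
            sections.append(f"Format: {self.output_format}")
        return "\n\n".join(sections)


parts = PromptParts(
    task="Identify the three main risks in this quarterly report.",
    role="a business analyst",
    context="[paste relevant background here]",
    constraints=["Explain each risk in one paragraph.", "Do not speculate beyond the report."],
    output_format="a numbered list",
)
print(parts.render())
```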

Common Improvements

Instead of: "Analyze our P&L"

—> Try: "Review Q3 P&L performance focusing on freight cost variances vs budget. Identify the top 3 cost drivers and recommend specific actions to improve margin by 2% next quarter."

Instead of: "Help with inventory issues"

—> Try: "Analyze this 3PL warehouse inventory data for SKUs with >90 days on hand. Calculate carrying costs, identify slow-moving items, and suggest liquidation strategies. Current data shows: [paste inventory report]"

Instead of: "Explain freight costs"

—> Try: "Break down this international freight quote for shipments from Shanghai to Los Angeles. Compare ocean vs air options, explain surcharges, and calculate total landed cost including duties for a 40ft container of electronics."

The key is pretty simple (not always easy). Be specific about what you want, why you want it, and how you want it delivered.
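You can even turn the what/why/how rule into a quick self-check before you hit send. Here is a toy sketch. `specific_prompt` is a hypothetical helper that joins the three parts and lists any that are empty. The P&L example from above is reworded slightly to fit the three fields.

```python
def specific_prompt(what: str, why: str = "", how: str = "") -> tuple[str, list[str]]:
    """Join what/why/how into one prompt and report which parts are empty."""
    parts = {"what": what, "why": why, "how": how}
    missing = [name for name, text in parts.items() if not text.strip()]
    prompt = " ".join(text.strip() for text in parts.values() if text.strip())
    return prompt, missing


vague, gaps = specific_prompt("Analyze our P&L.")
print(gaps)  # ['why', 'how']

better, gaps = specific_prompt(
    what="Review Q3 P&L performance focusing on freight cost variances vs budget.",
    why="The goal is to improve margin by 2% next quarter.",
    how="Identify the top 3 cost drivers and recommend specific actions.",
)
print(gaps)  # []
print(better)
```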

Sources:

Brown et al. (2020). Language Models are Few-Shot Learners. arXiv

Wei et al. (2022). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. arXiv

Kojima et al. (2022). Large Language Models are Zero-Shot Reasoners. arXiv

Chowdhery et al. (2022). PaLM: Scaling Language Modeling with Pathways. arXiv

Stiennon et al. (2020). Learning to Summarize from Human Feedback. arXiv

Bai et al. (2022). Constitutional AI: Harmlessness from AI Feedback. arXiv